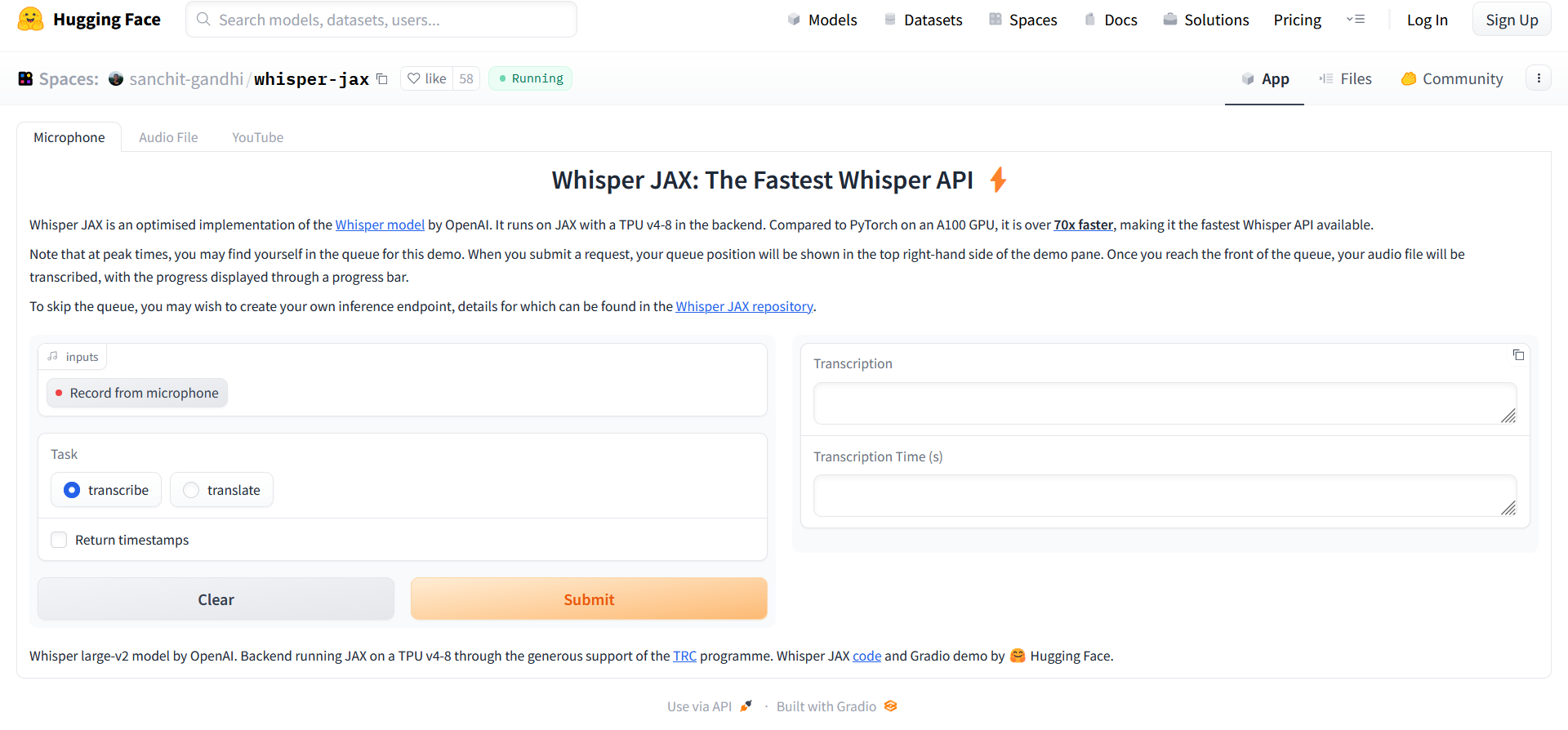

Whisper JAX is an optimised implementation of the Whisper model by OpenAI. It runs on JAX with a TPU v4-8 in the backend. Compared to PyTorch on an A100 GPU, it is over 70x faster, making it the fastest Whisper API available.

Key features and advantages include:

- Fast performance: Over 70x faster than PyTorch on an A100 GPU
- Optimized implementation: Built on JAX with a TPU v4-8 for maximum efficiency
- Accurate transcription: Provides accurate transcription of audio files
- Progress bar: Displays the progress of a transcription
- Create your own inference endpoint: To skip the queue, users can create their own inference endpoint using the Whisper JAX repository

Use cases for Whisper JAX include:

- Transcribing audio files quickly and accurately
- Improving the efficiency of transcription services
- Streamlining the transcription process for businesses and individuals
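
For readers who would rather run the model themselves than wait in the hosted queue, the following is a minimal sketch of local transcription. It assumes the `whisper_jax` package from the Whisper JAX repository is installed and exposes `FlaxWhisperPipline` as shown in that repository's README. The checkpoint name, the `transcribe` and `format_chunks` helpers, and the shape of the returned `chunks` list are assumptions made for illustration, not part of the description above.

```python
from typing import Any, Dict, List


def transcribe(audio_path: str, checkpoint: str = "openai/whisper-large-v2") -> Dict[str, Any]:
    """Transcribe one audio file with Whisper JAX (assumes whisper_jax is installed)."""
    # Imported lazily so format_chunks below can be used without JAX installed.
    # Note: the class name is spelled "Pipline" in the whisper_jax package.
    from whisper_jax import FlaxWhisperPipline

    pipeline = FlaxWhisperPipline(checkpoint)
    # The first call JIT-compiles the model and is slow;
    # later calls on the same pipeline reuse the compiled function.
    return pipeline(audio_path, task="transcribe", return_timestamps=True)


def format_chunks(result: Dict[str, Any]) -> List[str]:
    """Render timestamped chunks as '[start-end] text' lines.

    Assumption: result["chunks"] is a list of
    {"timestamp": (start, end), "text": str} entries.
    """
    lines = []
    for chunk in result.get("chunks", []):
        start, end = chunk["timestamp"]
        # The final chunk may lack an end time, so fall back to a label.
        end_label = f"{end:.1f}s" if end is not None else "end"
        lines.append(f"[{start:.1f}s-{end_label}] {chunk['text'].strip()}")
    return lines
```

Calling `format_chunks(transcribe("audio.mp3"))`, where `audio.mp3` is a placeholder path, would return one line per timestamped segment. On hardware other than a TPU the same code should still run, but the speedup quoted above should not be expected.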