Researchers at the Massachusetts Institute of Technology (MIT) and Harvard University have run an experiment to test whether artificial intelligence (AI) systems can understand cause and effect, a core part of human intelligence that current AI models struggle with. The experiment, led by Professor Josh Tenenbaum, was designed to probe beyond simple pattern recognition, the foundation of popular AI methods such as deep learning. Deep learning algorithms excel at detecting patterns in data and at tasks such as image and voice recognition, but they lack abilities that come naturally to humans, including causal reasoning.

The experiment showed AI systems a virtual world of moving objects and asked them questions about the events taking place. The systems answered descriptive questions about the objects well, but their performance dropped significantly on more complex questions about the causes and effects of those events, which exposes their limited grasp of causal relationships. For example, they could correctly report the color of an object but struggled to say what caused objects to collide or what would have happened if they had not collided.
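To make the difference between these question types concrete, here is a minimal sketch of a toy world. It is not the researchers' benchmark or system: the 1D track, the equal-mass elastic collisions, and every name in it (`Ball`, `simulate`, `color_of`, `collides`, `would_collide_without`) are assumptions made for illustration. A descriptive question only reads an attribute of the scene, while a counterfactual question requires running the scene again with something changed.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Ball:
    """Hypothetical object in a toy 1D world (illustration only)."""
    name: str
    color: str
    x: float             # position on a 1D track
    v: float             # velocity in units per time unit
    radius: float = 0.5


def simulate(balls, steps=100, dt=0.1):
    """Step equal-mass balls along a line and return collision pairs in order."""
    state = {b.name: b for b in balls}
    collisions = []
    for _ in range(steps):
        # Move every ball forward by one time step.
        state = {n: replace(b, x=b.x + b.v * dt) for n, b in state.items()}
        names = sorted(state)
        for i, a in enumerate(names):
            for b in names[i + 1:]:
                p, q = state[a], state[b]
                overlapping = abs(p.x - q.x) <= p.radius + q.radius
                approaching = (p.x - q.x) * (p.v - q.v) < 0
                if overlapping and approaching:
                    collisions.append((a, b))
                    # Equal-mass elastic collision in 1D: swap velocities.
                    state[a] = replace(p, v=q.v)
                    state[b] = replace(q, v=p.v)
    return collisions


def color_of(balls, name):
    """Descriptive question: read an attribute straight from the scene."""
    return next(b.color for b in balls if b.name == name)


def collides(balls, a, b):
    """Causal question: do a and b collide when the scene plays out?"""
    return tuple(sorted((a, b))) in simulate(balls)


def would_collide_without(balls, removed, a, b):
    """Counterfactual question: replay the scene with one ball removed."""
    remaining = [ball for ball in balls if ball.name != removed]
    return collides(remaining, a, b)


scene = [
    Ball("a", "red", x=0.0, v=2.0),    # moving right
    Ball("b", "green", x=3.0, v=0.0),  # at rest
    Ball("c", "blue", x=6.0, v=0.0),   # at rest
]

print(color_of(scene, "b"))
print(collides(scene, "b", "c"))
print(would_collide_without(scene, "a", "b", "c"))
```

In this scene, ball `b` only reaches ball `c` because ball `a` hits it first, so the counterfactual query depends on a model of how the objects interact. A system that only matches patterns in the frames it was shown has no such model to replay.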

This lack of causal understanding matters in real-world applications such as self-driving cars and industrial robots. Without the ability to reason about cause and effect, such systems may struggle to anticipate accidents or the consequences of their own actions. To address this, Tenenbaum and his team have developed a new type of AI system that combines several techniques, including deep learning for object recognition and software that builds 3D models of scenes and object interactions, to improve the accuracy of causal reasoning.

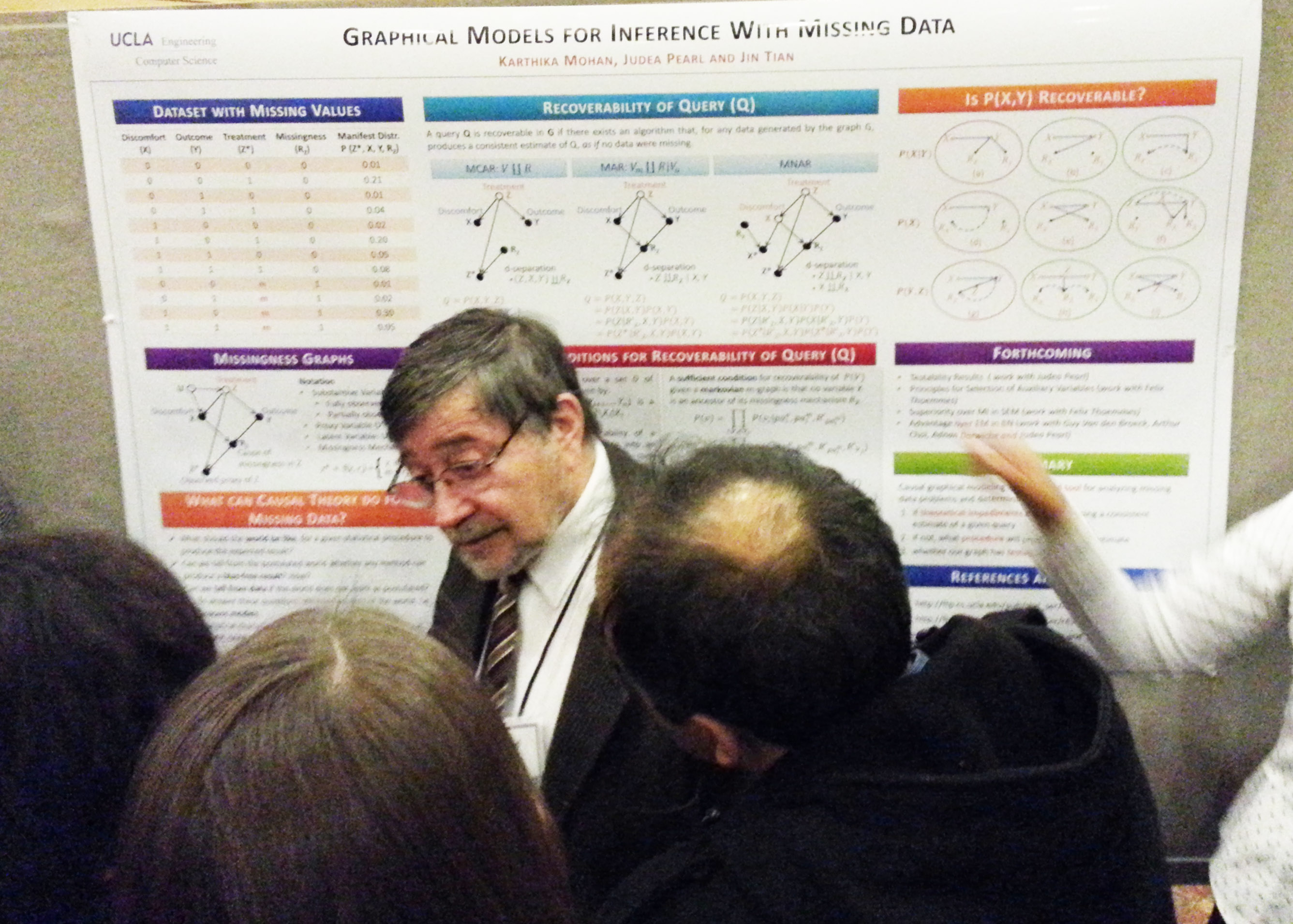

Although this approach shows promise, it requires more manual steps and is currently unreliable and difficult to scale. Nevertheless, experts believe that incorporating causality and representation learning will be crucial to advancing AI. Many prominent AI figures stress the importance of systems that not only learn from data but also reason about cause and effect, much as human minds build causal models that let them answer arbitrary queries.

The experiment conducted by Tenenbaum and his team highlights the limitations of current AI systems and the need for further research before AI can emulate the more nuanced capabilities of human intelligence. AI systems need to understand cause and effect to make informed decisions and predictions; without that understanding, they may draw incorrect conclusions or make flawed recommendations. As the field evolves, integrating causality and reasoning into AI models may pave the way for more advanced, human-like systems.