Generative Agents: Simulating Human Behavior with AI

Explore the cutting-edge world of generative agents, where artificial intelligence simulates human behavior to unlock new possibilities in healthcare, entertainment, education, and more.

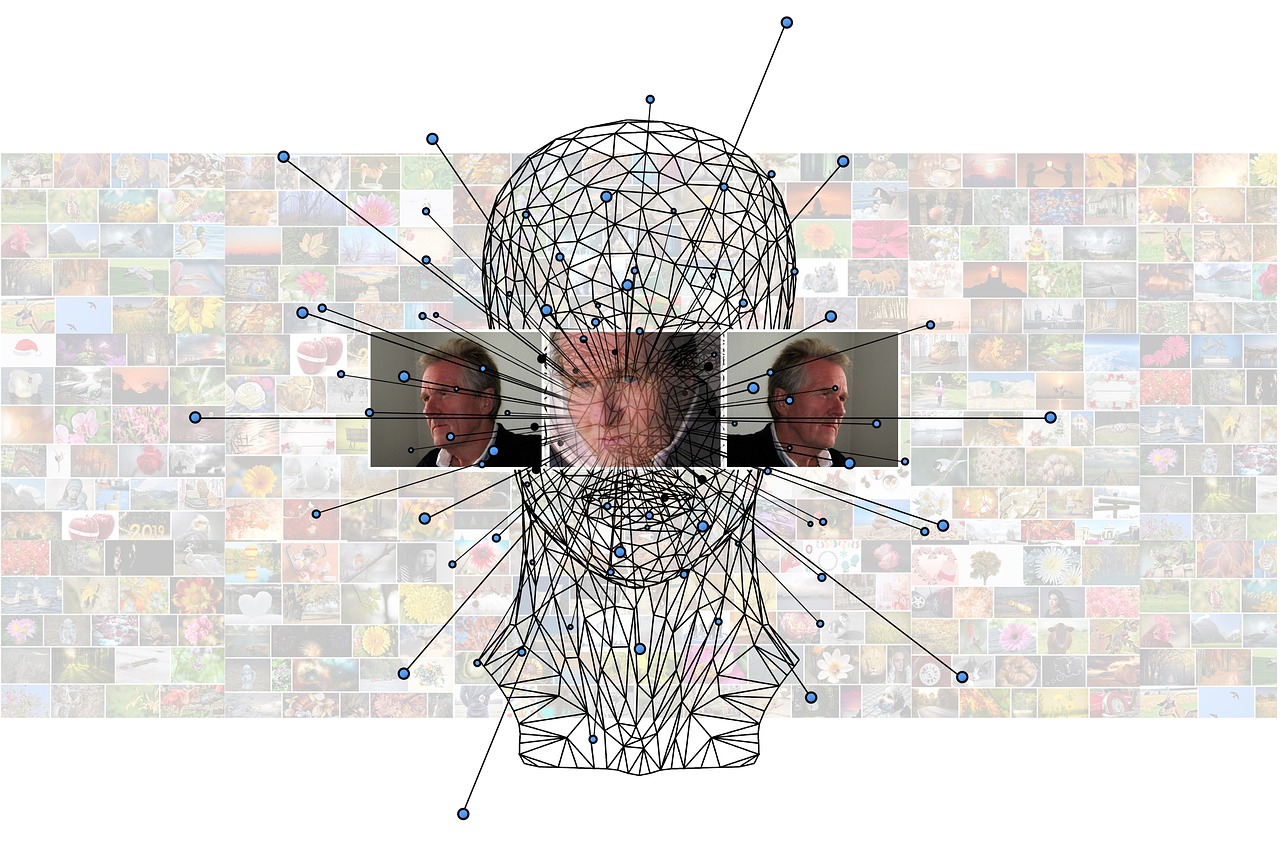

In the realm of AI image generation and computer vision, image segmentation plays a critical role. It is the process of partitioning a digital image into multiple segments, each a set of pixels, to simplify its representation. Enter "Segment Anything" by Meta AI, a project that pushes the boundaries of image segmentation.

Meta AI has unveiled the Segment Anything Model (SAM), a promptable approach to image segmentation. SAM can perform both interactive and automatic segmentation, so a wide range of segmentation tasks can be handled simply by designing the right prompt for the model, such as clicks, boxes, or text. Because the model generalizes to new types of objects and images beyond its training data, practitioners have less need to collect their own segmentation data and fine-tune a model for their specific use case.

SAM stands out for its flexibility and efficiency. Users can segment an object with a single click, or refine the result by clicking points to include in or exclude from the object. The model can also be prompted with a bounding box, and it can automatically find and mask all objects in an image. Once the image embedding has been precomputed, SAM generates a segmentation mask for any prompt fast enough to support real-time interaction.
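
To make the click and box prompts concrete, here is a minimal sketch of how they could be collected before being handed to a model. `PromptBuilder` is a hypothetical helper, not part of any Meta AI release. The keyword names it emits (`point_coords`, `point_labels`, `box`) and the label convention (1 for include, 0 for exclude) are chosen to line up with `SamPredictor.predict` in the open-source `segment_anything` package; treat that mapping as an assumption to check against the repository.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Assumed label convention: 1 = include (foreground), 0 = exclude (background).
FOREGROUND, BACKGROUND = 1, 0


@dataclass
class PromptBuilder:
    """Hypothetical helper that accumulates click and box prompts."""

    points: List[Tuple[int, int]] = field(default_factory=list)
    labels: List[int] = field(default_factory=list)
    box: Optional[Tuple[int, int, int, int]] = None

    def include(self, x: int, y: int) -> "PromptBuilder":
        # A click on a pixel that belongs to the object.
        self.points.append((x, y))
        self.labels.append(FOREGROUND)
        return self

    def exclude(self, x: int, y: int) -> "PromptBuilder":
        # A click on a pixel that should be left out of the object.
        self.points.append((x, y))
        self.labels.append(BACKGROUND)
        return self

    def with_box(self, x0: int, y0: int, x1: int, y1: int) -> "PromptBuilder":
        # Bounding box given as top-left and bottom-right corners (XYXY).
        if x1 <= x0 or y1 <= y0:
            raise ValueError("box must have positive width and height")
        self.box = (x0, y0, x1, y1)
        return self

    def as_kwargs(self) -> Dict[str, object]:
        # Plain lists here; a real predictor would expect NumPy arrays.
        return {
            "point_coords": [list(p) for p in self.points] or None,
            "point_labels": list(self.labels) or None,
            "box": list(self.box) if self.box else None,
        }


prompt = PromptBuilder().include(320, 240).exclude(100, 80).with_box(200, 150, 450, 330)
kwargs = prompt.as_kwargs()
print(kwargs)
```

With the official package and a downloaded checkpoint, these values would be converted to NumPy arrays and passed to the predictor after the image has been set. That step is omitted here so the sketch runs without model weights.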

Under the hood, SAM uses an image encoder to produce a one-time embedding for the image, while a lightweight prompt encoder converts any prompt into an embedding vector in real time. These two information sources are then combined in a lightweight decoder that predicts segmentation masks. After the image embedding is computed, SAM can produce a segment for any prompt in about 50 milliseconds in a web browser.
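
The encode-once, decode-per-prompt split described above can be illustrated with a toy sketch. This is not SAM's network: the "embedding" is just a copy of a tiny label grid and the "decoder" is a flood fill from the clicked pixel. The class name `ToyPromptableSegmenter` and its methods are hypothetical, chosen only to show why the expensive step runs once per image while each prompt stays cheap.

```python
from collections import deque
from typing import List, Optional

Image = List[List[int]]
Mask = List[List[bool]]


class ToyPromptableSegmenter:
    """Toy stand-in for the encode-once, decode-per-prompt pattern (not SAM)."""

    def __init__(self) -> None:
        self.encoder_calls = 0
        self._embedding: Optional[Image] = None

    def set_image(self, image: Image) -> None:
        # Stand-in for the heavy image encoder: runs once per image.
        self.encoder_calls += 1
        self._embedding = [row[:] for row in image]

    def segment(self, x: int, y: int) -> Mask:
        # Stand-in for the lightweight decoder: reuses the cached embedding.
        if self._embedding is None:
            raise RuntimeError("call set_image() before segment()")
        emb = self._embedding
        height, width = len(emb), len(emb[0])
        target = emb[y][x]
        mask = [[False] * width for _ in range(height)]
        mask[y][x] = True
        queue = deque([(x, y)])
        while queue:
            cx, cy = queue.popleft()
            for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                inside = 0 <= nx < width and 0 <= ny < height
                if inside and not mask[ny][nx] and emb[ny][nx] == target:
                    mask[ny][nx] = True
                    queue.append((nx, ny))
        return mask


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 1],
]
seg = ToyPromptableSegmenter()
seg.set_image(image)        # expensive step, done once
mask_a = seg.segment(0, 0)  # click at x=0, y=0
mask_b = seg.segment(3, 2)  # click at x=3, y=2, same cached embedding
print(seg.encoder_calls)
```

Both clicks reuse the same cached embedding, so the encoder counter stays at one no matter how many prompts follow.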

To train SAM, Meta AI constructed the largest segmentation dataset to date, known as the Segment Anything 1-Billion mask dataset (SA-1B). The data was collected using SAM itself: annotators interactively annotated images with the model, and the newly annotated data was then used to update SAM in turn. This iterative process helped improve both the model and the dataset. The final dataset includes more than 1.1 billion segmentation masks on 11 million licensed and privacy-respecting images, giving it 400 times more masks than any existing segmentation dataset.
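
A quick back-of-the-envelope check puts these figures in perspective. The sketch below uses only the numbers quoted above; the second result is merely what the 400x comparison implies, not a figure reported in this article.

```python
# Figures quoted in the text; 1.1 billion is a lower bound ("more than").
masks = 1_100_000_000
images = 11_000_000

# Average number of masks per image.
masks_per_image = masks / images

# Mask count of the largest earlier dataset implied by the 400x comparison.
implied_prior_masks = masks / 400

print(f"about {masks_per_image:.0f} masks per image")
print(f"implied earlier maximum: about {implied_prior_masks:,.0f} masks")
```

In other words, each image carries roughly 100 masks on average, which suggests dense annotation of many objects and parts per image rather than a single label.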

The flexibility of SAM and the vast SA-1B dataset open up numerous possibilities for AI image generation and segmentation tasks. With prompt engineering techniques, SAM can be used as a component in various domains, including augmented and virtual reality (AR/VR), content creation, scientific research, and more general AI systems. By sharing its research and dataset, Meta AI aims to facilitate further advances in segmentation and in image and video understanding, enabling a single model to be used in a variety of extensible ways.

The introduction of Segment Anything by Meta AI marks a new era in image segmentation. Together, SAM and the SA-1B dataset offer a level of flexibility and scale that promises to change how we approach image and video understanding across applications. As this technology continues to evolve, we can expect to see even more innovative uses of AI image generation and segmentation in the future.

For more information, you can explore the official Segment Anything website and the GitHub repository for the project.