The Rise of Artificial Intelligence: A Glimpse into the Future

Artificial Intelligence (AI) is a rapidly evolving field that is transforming the way we live and work.

Nvidia is one of the most pivotal companies in the world today. There’s a popular belief that their success is due to pure luck—always having the right product at the right time. But if you dive into their history, it becomes clear that Nvidia excels at fierce competition, learning from failures, and perfectly “riding the waves” of technological trends. Let’s break down how they do it.

Nvidia has surpassed Saudi Aramco in market value, trailing only Microsoft and Apple. While Microsoft has become deeply dependent on Nvidia’s chips during the AI boom (and is now rushing to develop alternatives), Apple moved away from Nvidia chips in the 2010s. Yet, Nvidia may still have a chance to challenge even this tech behemoth.

You might ask, “Why call Nvidia the most important company of our future?” The answer is simple: Nvidia sells the proverbial “shovels” to today’s digital gold rush. That’s one of the most reliable and sustainable business strategies, regardless of the era or context.

There’s a recurring narrative when discussing Nvidia:

“They just happened to have the right product at the right time. They’re just incredibly lucky.”

But if a company consistently develops market-defining products at exactly the right moments, it’s not just luck—it’s a testament to a formidable strategy and visionary leadership.

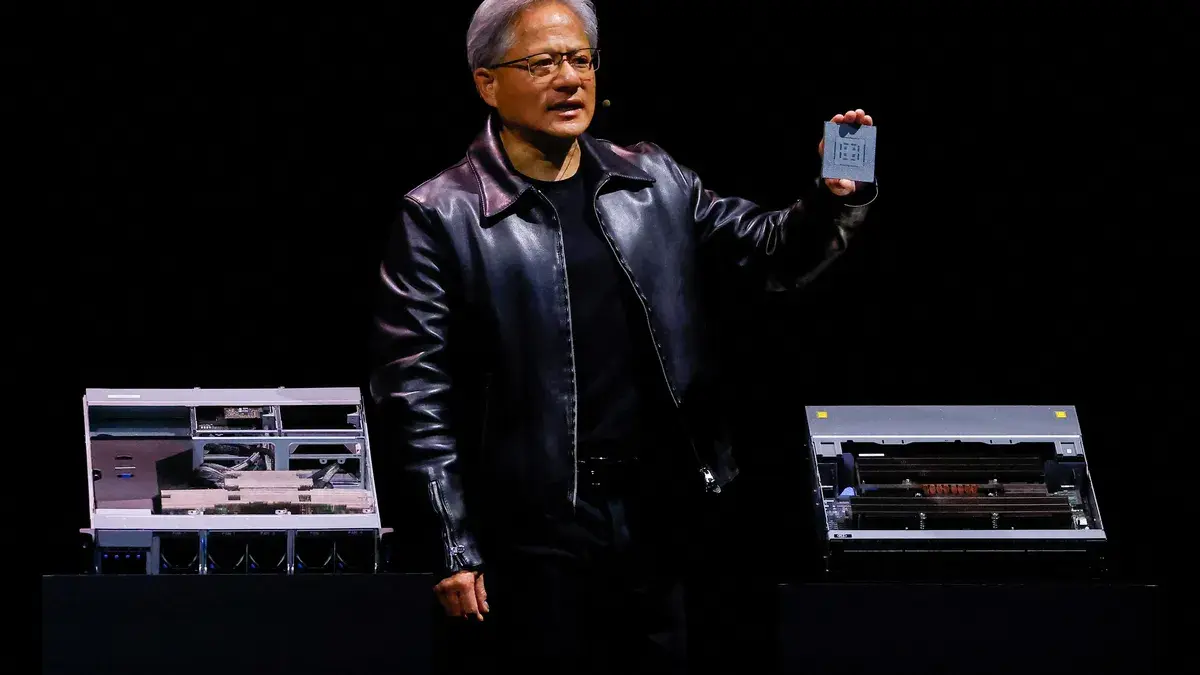

In this piece, I’ll do more than recount Nvidia’s development milestones. I’ll explore how CEO Jensen Huang and his team have made such precise and impactful strategic bets. Along the way, I’ll explain what products Nvidia creates that maintain steady demand across diverse industries and market segments.

Nvidia’s story is a long and dazzling journey filled with brilliant business decisions. To do it justice, I’ll split this material into two parts. Today, we’ll look at how Nvidia grew from a small “startup in a café” to a leading hardware producer for modern technological fields. In the second part (coming soon), we’ll examine how Nvidia transformed from a significant player to a future-defining company that might soon become the most valuable corporation in history.

Many of you might have heard the story of how Jensen Huang, Chris Malachowsky, and Curtis Priem sat at a table in a cheap café in San Jose, brainstorming what technology could become the next big thing. There’s even a tale about how the café was in such a rough neighborhood that the walls were riddled with bullet holes—a nod to the scrappy startup spirit.

However, the reality is that all three founders were already seasoned professionals by the time they began Nvidia. Jensen Huang had been a division director at LSI Logic, a major integrated circuit manufacturer, while his two partners were engineers at Sun Microsystems (later acquired by Oracle). In short, they weren’t college dropouts starting a garage company—they were experienced experts ready to disrupt the industry.

The trio agreed that computing was on the brink of expansion, with machines soon handling increasingly complex tasks. To meet this demand, central processors (CPUs) would need support—enter hardware acceleration.

In essence, CPUs are the "brain" of a computer, processing signals and distributing tasks. But like a human brain given 10–20 simultaneous tasks, CPUs can overheat and slow down when overwhelmed. Hardware acceleration acts as auxiliary "mini-brains," offloading work from the central processor.

Without these accelerators, we wouldn’t be able to simultaneously run multiple browser tabs, Excel, Photoshop, Telegram, and a game on our laptops.

Jensen, Chris, and Curtis were convinced that hardware acceleration was the future. They decided to focus on gaming, particularly the rapidly evolving 3D graphics segment. Advanced graphics were computationally intensive, making this a natural target for specialized GPUs (graphics processing units).

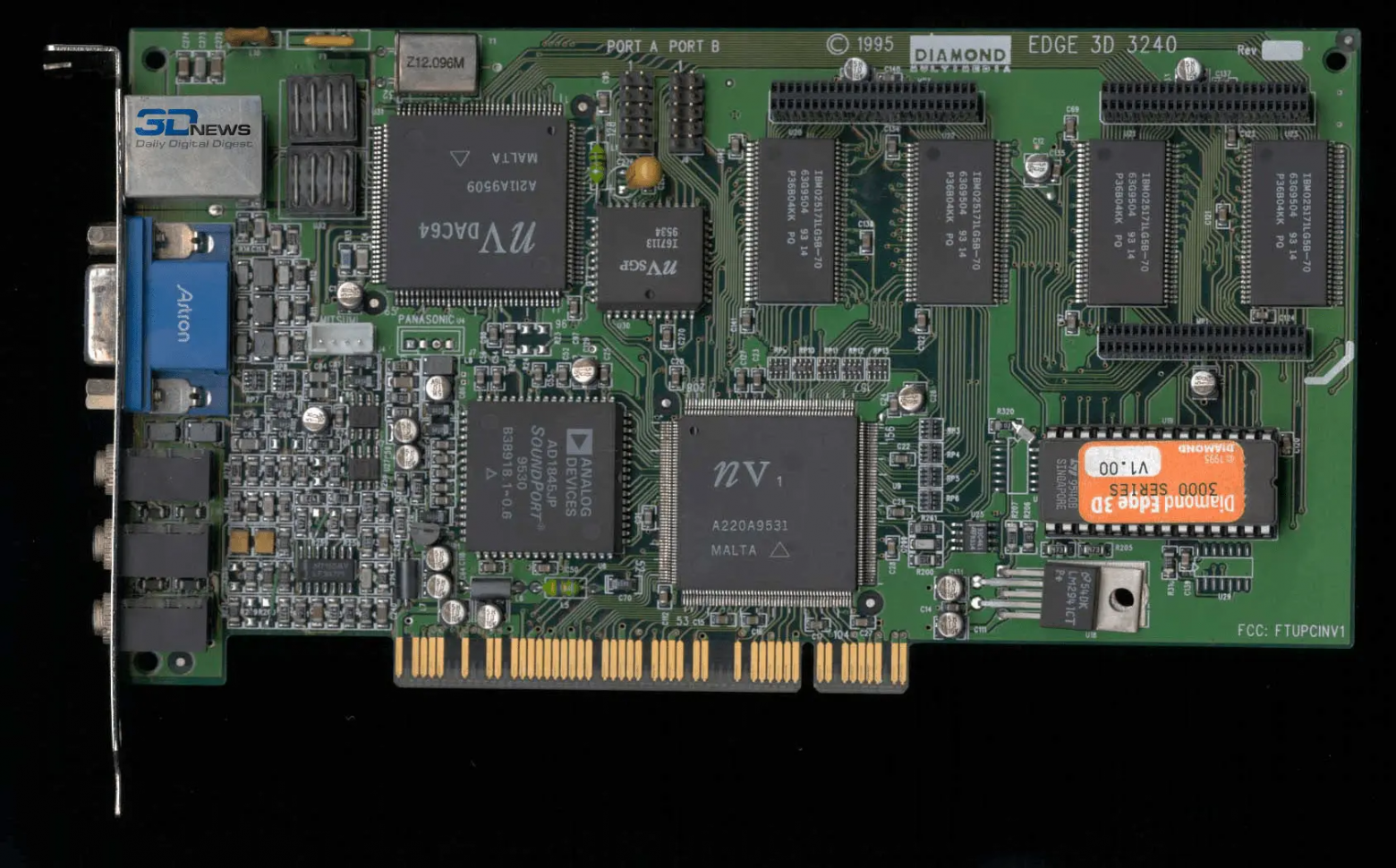

In 1995, Nvidia launched its first product: the NV1 multimedia graphics card.

The NV1 was innovative for its time, combining modules for 2D graphics processing, 3D acceleration, a sound card, and even a Sega Saturn gamepad port on a single board. Nvidia collaborated with Sega, allowing the porting of console exclusives to PC.

Nvidia was a fabless company, meaning it didn’t manufacture its own chips. Instead, it focused on design and contracted production out to third-party manufacturers. For the NV1, the chips were produced by SGS-Thomson Microelectronics in France. Today, Nvidia still relies on external manufacturing, primarily through Taiwanese companies.

Despite its innovation, the NV1 was a commercial failure. Why? It was heavily optimized for Sega consoles at a time when PC gaming was booming, primarily on Microsoft’s operating system. The NV1 launched in May 1995, and Microsoft released its DirectX API just four months later.

DirectX revolutionized game development by letting developers use hardware without writing specialized code for each component. Unfortunately, the NV1 built its 3D scenes from quadratic surfaces, while the DirectX ecosystem standardized on triangle polygons, so the card’s acceleration approach was incompatible with it. This made the NV1 unusable for most PC games of the era.

Nvidia had poured almost all its initial funding—$10 million—into the NV1. The failure forced Jensen Huang to cut half the workforce, leaving Nvidia with just enough cash to pay salaries for one more month. The company adopted a grim motto: “We have 30 days to stay in business.”

The NV1 failure was a harsh but formative lesson. Nvidia realized the importance of aligning with emerging market standards, particularly in the PC segment. They also learned the value of agility in adapting to new trends—a principle that would guide their future success.

Contrary to a popular myth, Nvidia did not invent the video card. The first 3D graphics adapter was created by IBM back in 1982. Other companies later entered the market with their own versions, but early models were expensive, underpowered, and largely niche products.

Mass-market, affordable, and versatile 3D graphics cards emerged in the mid-1990s. In 1995, IBM released the first mainstream example, followed by S3 Graphics with its S3 ViRGE chipset. Companies like Matrox and Yamaha also joined the race, making the market increasingly competitive.

In 1996, 3dfx released its Voodoo Graphics 3D accelerator, which became a game-changer. Specializing in arcade-style performance, Voodoo cards delivered unprecedented rendering speed and quality, quickly becoming a favorite among gamers and developers.

Quake, one of the most iconic games of the era, showcased the capabilities of Voodoo hardware. With superior textures and rendering speed, Voodoo cards were the benchmark for top-tier gaming.

While Voodoo2 maintained its dominance into 1998, Nvidia launched a new product: the Riva TNT (NV4). Unlike Voodoo, which required an external video card for 2D rendering, the Riva TNT offered an integrated solution, simplifying the setup for users. It was also more affordable, appealing to the rapidly growing mid-market segment.

This strategy marked a turning point. By offering a product that was cheaper, easier to use, and good enough for most gamers, Nvidia began eating into 3dfx’s market share.

Jensen Huang’s team adopted a strategy of minimizing development cycles. While 3dfx took years to develop new chipsets, Nvidia quickly released updated models, continuously improving performance and affordability. This agility allowed Nvidia to capture market segments that 3dfx couldn’t adapt to fast enough.

Nvidia also reduced defective chip rates by integrating a robust quality control process, further enhancing its reputation among manufacturers and consumers.

In December 2000, Nvidia decisively ended its rivalry with 3dfx by agreeing to acquire the company’s core assets for $70 million in cash plus Nvidia stock; 3dfx itself filed for bankruptcy in 2002. Nvidia absorbed 3dfx’s patents and technology, solidifying its position as the leading designer of graphics chips. This marked the company’s first major triumph, setting the stage for further dominance.

By the early 2000s, the graphics card market had narrowed to three key players: Nvidia, Intel, and ATI. Intel focused on integrated graphics, leaving Nvidia and ATI to compete in the discrete GPU market. ATI’s Radeon series emerged as Nvidia’s primary competitor, sparking a rivalry that persists to this day.

To secure its position, Nvidia forged partnerships with major companies. It also began acquiring smaller companies to expand its technological base.

In 2006, AMD acquired ATI, creating a combined entity to compete with both Nvidia and Intel. While this merger made sense strategically, it cost ATI its contracts with Intel, which was now dealing with a subsidiary of its main CPU rival, and that gave Nvidia an opening to capture new deals. There’s even a rumor that AMD initially approached Nvidia about an acquisition, but Jensen Huang declined, foreseeing greater opportunities as an independent company.

In 2007, Nvidia launched CUDA (Compute Unified Device Architecture), a software platform enabling developers to use Nvidia GPUs for general-purpose computing. CUDA allowed developers to leverage GPU power for mathematical computations, algorithms, and advanced technologies like machine learning.

CUDA’s support for legacy languages like Fortran helped Nvidia penetrate high-performance computing markets. By enabling AI researchers and engineers to work seamlessly on Nvidia hardware, CUDA laid the foundation for Nvidia’s future dominance in AI.
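To make the idea of general-purpose GPU computing more concrete, here is a minimal sketch of the programming model CUDA popularized: you write a small function (a "kernel") that computes one output element, and the hardware runs thousands of copies of it at once. The sketch is plain Python running on the CPU, not real CUDA code. The names `saxpy_kernel`, `launch`, and `saxpy` are hypothetical and chosen only for this illustration.

```python
# CPU-only emulation of the CUDA execution model (illustrative, not GPU code).
# All function names here are made up for this sketch.

def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, out):
    """Work done by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(x):                          # guard: the grid may overshoot the data
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Run the kernel once per (block, thread) pair.

    A GPU would run these invocations in parallel. This loop runs them one
    after another, which gives the same result because no invocation
    depends on any other.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


def saxpy(a, x, y, block_dim=4):
    """Compute a * x[i] + y[i] for every i using the emulated launch."""
    out = [0.0] * len(x)
    grid_dim = (len(x) + block_dim - 1) // block_dim  # enough blocks to cover x
    launch(saxpy_kernel, grid_dim, block_dim, a, x, y, out)
    return out


result = saxpy(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0])
print(result)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

The key point is that every element is computed independently. That independence is what lets a GPU spread the work across thousands of cores, and it is the same property that makes the large matrix operations behind machine learning such a good fit for this hardware.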

In 2012, the AlexNet neural network achieved record-breaking image recognition accuracy at the ImageNet Large Scale Visual Recognition Challenge. It was trained on Nvidia GPUs using CUDA, demonstrating that GPUs were well suited to AI workloads. This pivotal moment cemented Nvidia’s role as a key player in the AI revolution.

As the 2010s began, Nvidia faced new challenges, and it responded with aggressive diversification.